How To Check If It’s a Weekday in Azure Data Factory and Synapse Analytics

In Azure Data Factory and Azure Synapse Analytics, there are objects called “activities”, which run inside of “pipelines”, which are called by “triggers”.

Sometimes you need activities inside of a pipeline to only run on certain days of the week, even though the larger pipeline trigger runs daily. Here’s how to check if the current day is a weekday using Microsoft’s expression language, which is used in both ADF and Synapse pipelines.

Doing the Needful

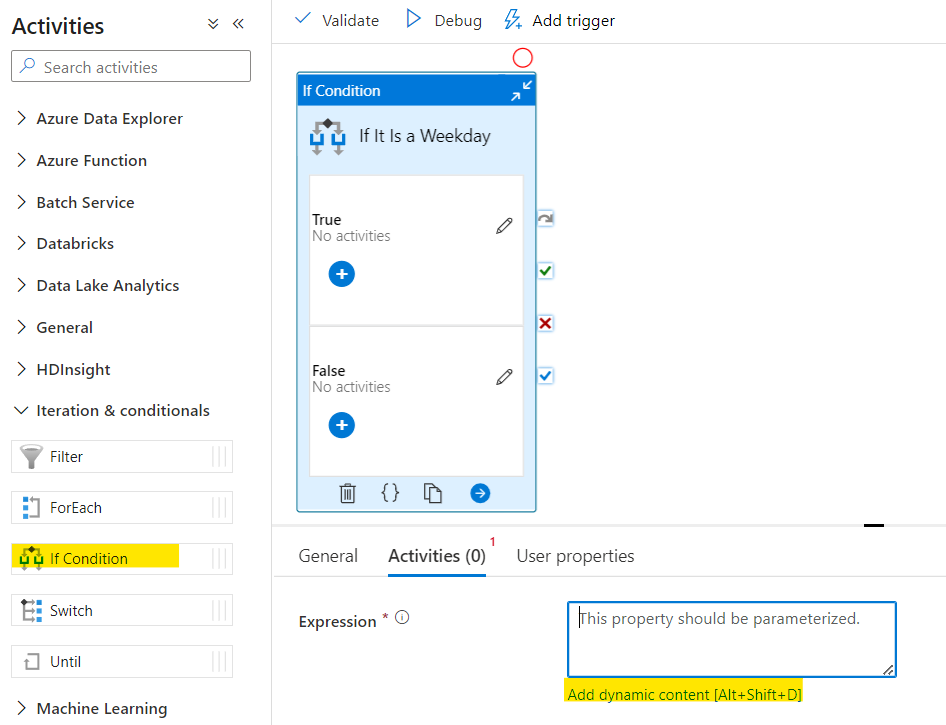

First, create an If condition in your pipeline. Next, click on the Add dynamic content button.

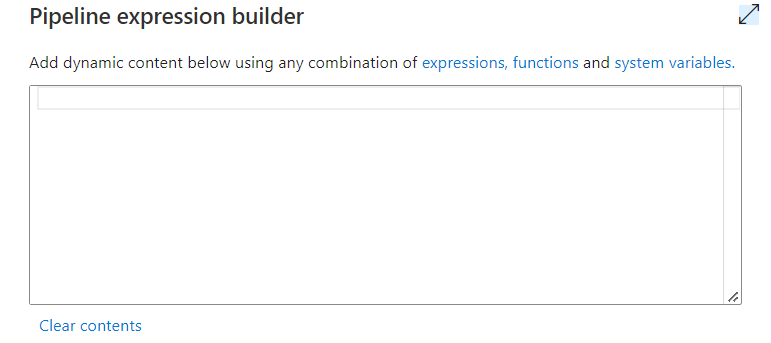

You should now be in the Pipeline Expression Builder menu.

Using these functions, you can write the code below which will return true if the current day is a weekday. Make sure to choose your desired time zone, since the day of the week varies based on where one is in the world. Options on this page.

@contains(

createArray(1, 2, 3, 4, 5),

dayOfWeek(convertFromUtc(utcNow(), 'Pacific Standard Time'))

)

Per the documentation for dayOfWeek() found here, it returns an integer from 0 to 6 representing the day of the week, with Sunday starting at 0. Therefore, 1-5 represents the weekdays Monday thru Friday.

An alternative way of writing this code is below. I find it less attractive, as it relies on the often perilous practice of string comparisons. Why compare strings when you can compare integers? Not recommended, but it works.

@contains(

'12345',

string(dayOfWeek(convertFromUtc(utcNow(), 'Pacific Standard Time')))

)

Solution Tools

This solution utilizes the expression functions below provided by Microsoft in Azure Data Factory and Synapse Analytics.

contains(): Check if a collection contains a value. Details here.createArray(): Make an array ad hoc. Details here.dayOfWeek(): Get an integer value denoting the current day of the week. Details here.convertFromUtc(): Convert a UTC timestamp to a different time zone. Details here.utcNow(): Get the current UTC Time. Details here.string(): Return the string version of a value. Details here.

A Closing Soliloquy

I’ve got a question for any ADF or Synapse experts out there. Let’s say I needed to find out if it’s a weekday in several different pipelines across my data factory or Synapse workspace. In a normal programming language, I’d write a function that takes a date as a parameter and returns a Boolean letting the caller know if it’s a weekday. A basic, reusable, self-contained piece of code. I’d import the module with the function wherever needed, and I’d never have to rewrite that logic again.

How would one accomplish the same modularity in Azure Data Factory or Synapse Analytics? The goal is to avoid having to copy and paste the same expression code all over the place. Here are some of my ideas.

Option 1: You write a pipeline that takes in the date as a parameter and returns a Boolean that tells you if it’s a weekday or not.

Bad news, you actually can’t do this. Azure Data Factory and Synapse pipelines don’t allow for user-defined return values (update: they do now) unless you go through some strange workarounds, or utilize Synapse notebooks, which seems like Super Saiyan level overkill for the simple task of determining if it’s a weekday or not.

Option 2: You call all pipelines from a parent that precalculates values needing reuse and passes them down to children.

This is a pattern my team is actively using to work around the fact that Azure Data Factory has global parameters, but Azure Synapse Analytics does not. I suppose one could extend this pattern to accomplish a form of code reuse when the only goal of your function is to calculate a value. You’d calculate all your constant values upfront and pass them to child pipelines as parameters. This gets smelly fast if you have 1 parameter for every value in need of reuse. An easy workaround for that is to store your properties in a JSON object and pass that 1 object down to child pipelines. For example, consider this JSON object.

{

"isTodayAWeekday": true,

"isTodayAHoliday": false,

"isDieHardAChristmasMovie": true,

"isThorStrongerThanHulk": maybe

}

If you had two or more pipelines needing these values, you could make a parameter of type object that’d take in the JSON. Child pipelines would then access its values using dot notation. That way, you don’t need a parameter for every value, you just need one for the object. That being said, I still think this is too much effort for mirroring the reusability provided by functions, or even global variables, in a standard programming language.

Option 3: You store reusable expression code in a text file maintained in your Azure Storage Account. Every time you need an oft-used piece of logic, you read it from the file using a Lookup activity. You then get the output of the Lookup and pass it in as the dynamic content for the activity that needs the logic. By doing so, you only have to write the expression code once for all places that need it.

I call this one bastardized reusability. I think it’s a bad idea. I feel wrong for having typed it. I’m sorry Microsoft, but you should just give us global parameters in Synapse and the ability to return values from pipelines so we can stop coming up with creative, although sometimes inefficient, workarounds.

Update: In February of 2023 the Azure Data Factory team released a feature that lets you return values from pipelines. The comments above complaining about not being able to do so no longer stand. You can read more about the feature here. I’d like to think someone from their team read this article and fast-tracked development on it, although that may be unlikely, a man can dream.