Note: This article was originally written for a workshop I created for computer science students, inspired by Canva and Meta allowing candidates to use AI in coding interviews. I’ve adapted it here for a general audience. Check out the Workshop Website, GitHub, and Slide Deck.

Times Are Changing

AI has fundamentally changed what it means to be a productive software engineer. The question we’re all asking right now across the industry is “when AI writes almost all code, what happens to software engineering?”

There has to be something of value, of high skill, that justifies developer salaries. Something beyond writing code from scratch by hand. Most people assume writing code is all software engineers do, but that wasn’t true before AI, and it isn’t true now.

So what’s left for engineers to do? The answer is mostly the same as it’s been in the many attempts to get rid of developers in the past.

Can you drive the software development life cycle end to end, ship something correct, and then maintain, enhance, and monitor it for the foreseeable future?

That’s what actually matters.

I work at a company you’ve likely heard of and use GitHub Copilot in its various forms every day. I’ve done so since 2021, when it first hit the scene as a mediocre coding assistant that was little more than fancy autocomplete. Since then, my goodness has it had a glow-up. It’s far from mediocre, and I wouldn’t want to go back to a world working without it.

What I do most of the time now amounts to natural language programming. I describe what I want in jargon-laden technical prose, refine what AI generates, verify correctness, and integrate it into larger systems. That might make you think, “Well then, what value is your technical expertise? Couldn’t a random business person just vaguely describe what they want and cut out the software engineer entirely?”

No. Matheus Lima explains why well in AI Can Write Your Code. It Can’t Do Your Job.

Technical expertise is still essential across the entire software development life cycle, beyond the code itself. Understanding systems architecture, debugging weird edge cases, knowing when AI is confidently wrong, designing systems that scale, reviewing and validating AI-generated code, navigating organizational politics, aligning stakeholders, making build-vs-buy decisions with incomplete information, owning accountability when things fail in production, planning, testing, integration, DevOps, FinOps, security. That’s where the real skill lives.

Matteo Collina, maintainer of Node.js, Fastify, Pino, and Undici, and Chair of the Node.js Technical Steering Committee, captures this well in The Human in the Loop:

I provide judgment. I decide what should be built, how it should behave, and whether the implementation matches the intent. I catch the cases where the AI confidently produces something that looks right but isn’t. This isn’t a new skill. It’s the same skill senior engineers have always had. The difference is that now it’s the primary skill, not one of many.

When I ship code, my name is on it. When there’s a security vulnerability in Undici or a bug in Fastify, it’s my responsibility. I can use AI to help me move faster, but I cannot outsource my judgment. I cannot outsource my accountability.

Your Value Lies Beyond the Code

Nicholas Zakas, creator of ESLint, argues in From Coder to Orchestrator that the role is shifting from writing code to orchestrating systems. The skills that matter now are problem decomposition, validation, context management, and knowing when AI is wrong.

This isn’t speculation. Boris Cherny, lead developer of Claude Code at Anthropic, put it bluntly: “In the last thirty days, 100% of my contributions to Claude Code were written by Claude Code.”

But that shouldn’t scare you. Check out the OpenAI and Anthropic careers pages.

Anything with the word “Engineer” in it is a technical role. If AI is so capable, why are these companies still hiring technical staff? What would those engineers be doing that OpenAI Codex or Claude Code can’t handle with Sam Altman or Dario Amodei simply describing what they want?

Well, this is a tale as old as time. A few years ago, a new grad posted on Reddit, confused about why senior engineers spend so little time coding. They wanted to know, what are all these meetings about? What do staff and principal engineers actually do if not write code?

The top comment sums it up:

Because the real value in this profession isn’t writing code. It’s determining what code to write.

And the top reply to that comment:

and a bunch of clowns, who are screaming that AI will take our jobs, don’t realize this. They think we are monkeys mashing our keyboard all day. I welcome AI to do all my coding or 90% of it so I can focus on more critical stuff.

A text-generating non-deterministic probability engine isn’t going to cut it at enterprise scale when all it has to go on is “make it faster,” “please fix all the security,” “make it compliant so we don’t get sued,” or “make it look better, the customers said it was ugly.”

Why LLMs Can’t Replace You (Yet)

I want to be clear about the limitations here. LLMs are powerful tools, but they have fundamental constraints that matter for real engineering work.

Zed’s article, Why LLMs Can’t Build Software, nails it. LLMs can generate code that looks right, but they can’t maintain mental models, verify their own reasoning, or truly understand the system they’re building into. You still need humans who hold context across codebases, reason about edge cases, and make judgment calls.

Simon Willison, co-creator of Django and a highly respected voice on AI in the developer community, makes a related point in Your Job Is To Deliver Code You Have Proven to Work.

Almost anyone can prompt an LLM to generate a thousand-line patch and submit it for code review. That’s no longer valuable. What’s valuable is contributing code that is proven to work.

Bridging the gap between ‘AI generated this’ and ‘this is production-ready’ is still a human job. Owning the outcome. When it breaks at 2 AM, ‘the AI wrote it’ isn’t an answer. You signed off on it.

Remember, a computer can never be held accountable, therefore a computer must never make a management decision. That’s your job. That’s what separates a developer from a vibe coder, someone who just prompts AI and hopes for the best.

Is It Vibe Coding or Vibe Engineering?

There’s been a lot of buzz around vibe coding, a term coined by Andrej Karpathy, an OpenAI co-founder and technical heavyweight in the Machine Learning world. It refers to the fast and loose approach where you prompt AI and accept whatever comes out if it seems to work. Ironically, the term was coined by someone as technical as it gets.

Simon Willison coined a counterpart he calls vibe engineering, which he admits sounds a bit silly. It’s working with AI tools while staying “proudly and confidently accountable for the software you produce.”

The distinction matters. Vibe coding is fine for a lot of things. Even Linus Torvalds, creator of Linux and Git, is vibe coding these days. If he’s doing it, it’s clearly not beneath anyone. But for anything sufficiently serious, you shouldn’t even try it (and he says as much).

Willison’s concept of vibe engineering is more appropriate for production software, or anything you actually need to work and maintain. That means writing (or having AI write at your explicit direction) automated tests, planning before implementing, maintaining good version control habits, developing strong code review skills, and building the ability to recognize when AI is wrong.
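To make the testing habit concrete, here is a minimal sketch in Python. The `paginate` helper is hypothetical, invented purely for illustration; picture it as something an AI assistant just handed you. The tests are the part you own: they pin down the edge cases (a partial last page, a page past the end, an invalid page number) where plausible-looking generated code tends to go wrong. The `test_` naming follows the convention that runners such as pytest pick up, but nothing here depends on a test framework.

```python
def paginate(items, page, page_size):
    """Return the items on a 1-indexed page.

    Hypothetical helper for illustration, standing in for AI-generated code.
    """
    if page < 1 or page_size < 1:
        raise ValueError("page and page_size must be positive")
    start = (page - 1) * page_size
    return items[start:start + page_size]


def test_first_page():
    assert paginate([1, 2, 3, 4, 5], page=1, page_size=2) == [1, 2]


def test_last_partial_page():
    # Five items at two per page leaves a single item on page three.
    assert paginate([1, 2, 3, 4, 5], page=3, page_size=2) == [5]


def test_page_past_the_end_is_empty():
    assert paginate([1, 2, 3, 4, 5], page=4, page_size=2) == []


def test_rejects_page_zero():
    # Pages are 1-indexed, so page 0 should be an error, not a silent empty list.
    try:
        paginate([1, 2, 3], page=0, page_size=2)
    except ValueError:
        return
    raise AssertionError("expected ValueError for page=0")
```

If a later AI-generated change quietly switches the helper to 0-indexed pages, these tests fail immediately instead of a customer finding out for you.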

As Willison puts it:

AI tools amplify existing expertise. The more skills and experience you have as a software engineer the faster and better the results you can get from working with LLMs and coding agents.

The Illusion of Vibe Coding

This section’s title is pulled from a great article by Frantisek Lucivjansky I think you should read: The Illusion of Vibe Coding: There Are No Shortcuts to Mastery.

I’ll summarize. If you skip the hard work of actually understanding how code works, you will hit a wall. AI can generate code, but you need a technical foundation to debug it, extend it, and fix it when things break. And things always break. Always.

Joel Spolsky, co-founder of Stack Overflow and Trello, wrote about this dynamic back in 2000, long before AI coding existed. In Things You Should Never Do, Part I, he laid out what he called a “cardinal, fundamental law of programming”:

It’s harder to read code than to write it. This is why code reuse is so hard. This is why everybody on your team has a different function they like to use for splitting strings into arrays of strings. They write their own function because it’s easier and more fun than figuring out how the old function works.

It’s also why vibe coding falls apart at scale. When things break, you can paste the error back to AI. And when that fix breaks, paste again. But that’s a loop, not engineering. Context windows fill up. Context rot sets in. At some point, a human has to actually diagnose the problem. AI can help you fix it once you know what’s wrong. But you have to know what’s wrong.

Spolsky was writing about Netscape, the browser that dominated before Internet Explorer, which dominated before Chrome, but it still applies today. His article was about why companies shouldn’t rewrite codebases from scratch, but the lesson transfers perfectly to AI-generated code. Reading and maintaining code is the hard part. That’s where the skill lives.

This isn’t just opinion. Anthropic, the company behind Claude, published research confirming this.

In How AI Assistance Impacts the Formation of Coding Skills, they found that developers who offloaded their thinking to AI scored nearly two letter grades lower on comprehension tests. The largest gap was in debugging, the very skill you need most when AI-generated code breaks. But developers who used AI while still asking conceptual questions and building understanding scored just as well. The tool isn’t the problem. Mindless delegation is. This Reddit discussion about the research is worth reading too.

Here are some insightful comments from that thread:

I’m not gonna lie, using AI is like a performance-enhancing drug for the brain. But it also helps me realize when I should independently spike and research, because it’s constantly making up sh*t that SHOULD work but just ain’t so. Human + AI is best, but juniors probably shouldn’t be using it, in much the same way that teenagers should not be drinking alcohol. Many will still be using occasionally, but not having good boundaries around it means you’re one big AWS outage away from having half your brain ripped out.

There’s an important caveat here: “However, some in the AI group still scored highly [on the comprehension test] while using AI assistance. When we looked at the ways they completed the task, we saw they asked conceptual and clarifying questions to understand the code they were working with—rather than delegating or relying on AI.” As usual, it all depends on you. Use AI if you wish, but be mindful about it.

The article is weird. It seems to say that in general across all professions, there are significant productivity gains. But for software development specifically, the gains don’t really materialize because developers who rely entirely on AI don’t actually learn the concepts - and as a result, productivity gains in the actual writing of the code are all lost by reduced productivity in debugging, code reading, and understanding the actual code. Which, honestly, aligns perfectly with my own real life perception. There are definitely times where AI saves me hours of work. There are also times where it definitely costs me hours in other aspects of the project.

So, you still need computer science fundamentals. Pick a language (I’d suggest Python) and invest time in understanding how programming, databases, operating systems, distributed systems, networking, and software actually work.

If you want a great resource for building that foundation, check out From Nand to Tetris, which is also on Coursera. It’s a project that teaches you how a general-purpose computer and its software function from the ground up. AI can’t accelerate you in real-world software engineering if you’re useless without it. Build the foundation first.

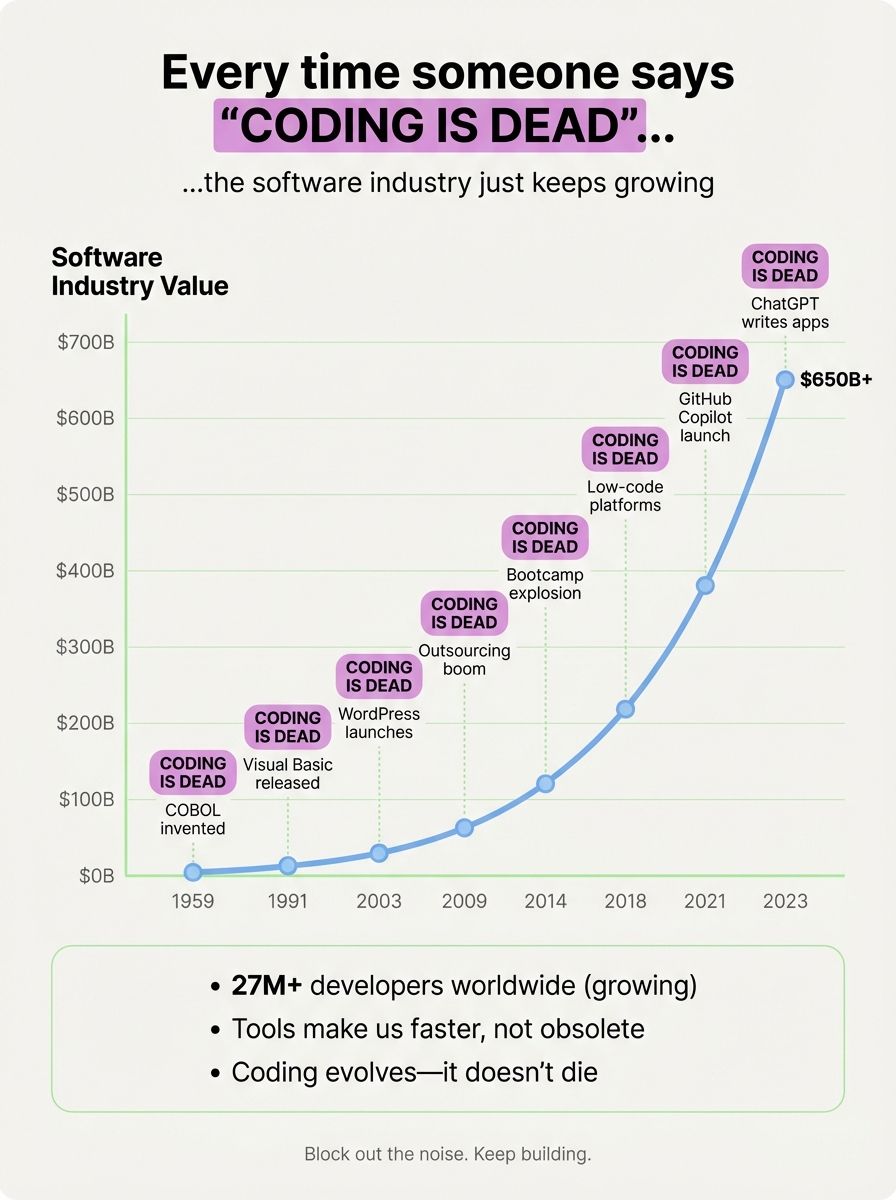

A Historical Perspective

Here’s a historical perspective that I find grounding. Stephan Schwab wrote Why We’ve Tried to Replace Developers Every Decade Since 1969, which does an excellent job showing that people have been trying to get rid of developers since long before AI coding became a thing.

This graphic by Kedasha Kerr on LinkedIn pairs perfectly with that article.

Did you know that business people in the 60s thought COBOL would let them fire all their developers? COBOL! The Common Business-Oriented Language. They believed it would let them plainly describe what they want and thus build software without software developers. How’d that turn out?

We’ve been here before. Every decade or so, someone claims that a new technology will eliminate the need for developers. None of these predictions came true because the fundamental complexity of software development cannot be abstracted away. The complexity just moves.

This doesn’t mean AI isn’t transformative. It is. But it’s transformative in the way that power tools are for carpenters, or calculators for accountants. For anything actually important, and sufficiently complex, you still need to know what you’re doing.

The Junior Developer Question

One of the hottest topics in tech circles these days is “will AI kill entry-level software developer jobs?” It’s a fair concern given headlines about job market struggles and declining internship opportunities.

I think junior roles will survive, but this is a raging debate. We have job data, but it’s hard to tell if AI is actually doing the work or if companies are just pouring CapEx into data centers instead of hiring.

For a balanced overview of where things stand, start with The Next Two Years of Software Engineering by Addy Osmani, a software engineer at Google working on GCP and Gemini.

From there, here’s a collection of articles from people who have thought deeply about this. Read them and form your own opinion.

The case that juniors can thrive:

- Kent Beck - The Bet On Juniors Just Got Better

- Andrew Ng - Why You Should Learn to Code and not Fear AI

- Andrew Ng - An 18 Year Old’s Dilemma: Too Late to Contribute to AI?

- GitHub’s Blog (Gwen Davis) - Junior Developers Aren’t Obsolete: Here’s How To Thrive in the Age of AI

- Time Magazine (Marcus Fontoura) - You Should Still Study Tech — Even if AI Replaces Entry Tech Jobs

- Thomas Dohmke - Developers, Reinvented

- Nicholas C. Zakas - From Coder to Orchestrator: The Future of Software Engineering With AI

- Matteo Collina - The Human in the Loop

- Latent Space - The Rise of the AI Engineer

The case that things are getting harder (or…it’s over) for juniors:

- Stack Overflow Blog (Phoebe Sajor) - AI vs Gen Z: How AI Has Changed the Career Pathway for Junior Developers

- Michael Arnaldi - The Death of Software Development

- Kaustubh Saini - 9 Entry-Level Jobs Are Disappearing Fast Because of AI

- Time Magazine (Luke Drago and Rudolf Laine) - What Happens When AI Replaces Workers?

- Namanyay Goel - New Junior Developers Can’t Actually Code

- Fortune (Chris Morris) - Anthropic CEO Warns AI Could Eliminate Half of All Entry-Level White-Collar Jobs

Should I Still Grind LeetCode?

Yes. Not because it makes sense, not because you’ll ever solve clean and contained LeetCode-style problems at work, but because companies are still asking those questions.

Even though AI can solve almost all LeetCode problems instantly, companies still use these questions as a baseline filter. Can you think algorithmically at all? Do you understand time and space complexity? Can you reason about tradeoffs?
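As a rough illustration of the tradeoff reasoning these questions probe, here is a minimal sketch in Python. The problem (does any pair in a list sum to a target?) and the function names are assumptions chosen for illustration, not taken from any particular company’s interview. Both functions return the same answers; what an interviewer wants to hear is why you would pick one over the other.

```python
def has_pair_brute_force(nums, target):
    """Check every pair: O(n^2) time, O(1) extra space."""
    for i in range(len(nums)):
        for j in range(i + 1, len(nums)):
            if nums[i] + nums[j] == target:
                return True
    return False


def has_pair_with_set(nums, target):
    """Remember values seen so far: O(n) average time, O(n) extra space."""
    seen = set()
    for value in nums:
        # A match exists if the complement of this value appeared earlier.
        if target - value in seen:
            return True
        seen.add(value)
    return False


print(has_pair_brute_force([2, 7, 11, 15], 9))  # True (2 + 7)
print(has_pair_with_set([2, 7, 11, 15], 9))     # True (2 + 7)
print(has_pair_with_set([1, 2, 3], 7))          # False
```

The set-based version trades memory for speed. On a list of a few dozen items the difference is irrelevant; on millions of items it decides whether the code finishes at all. Being able to say that out loud is the skill being filtered for.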

LeetCode is an imperfect proxy for these things, but it’s the proxy most companies still use. Until the industry catches up to the reality of AI-assisted coding interviews (like those offered by Canva and Meta), you have to play the game.

Here are the best resources for grinding effectively.

- The Tech Interview Handbook - The best “hand hold me through this” guide online.

- The Blind 75 - For a structured “what problems should I focus on” plan that’s been curated over years by the tech community.

- The Grind 75 - From the makers of the Tech Interview Handbook, a customizable approach to The Blind 75.

- The NeetCode 150 - The Blind 75 but with 75 more problems to really go hard.

- The System Design Primer - For everything you need to pass system design interview rounds.

Some Companies Are Letting Candidates Use AI In Coding Interviews

While LeetCode is still a thing, there are some companies catching on to the fact that with AI, it no longer makes sense to ask those questions. It doesn’t reflect what a person would be doing at work if they were hired. Check these out.

- Canva - Yes, You Can Use AI in Our Interviews. In Fact, We Insist

- Wired - Meta Is Going to Let Job Candidates Use AI During Coding Tests

- Reddit Testimonial - Canva’s AI Assisted Coding Interview

- Anna J. McDougall - You Can’t Outrun AI in Tech Interviews, So We Designed Around It

Some common themes across these new AI-assisted interviews are:

- They fully expect you to use AI. Copy/paste the problem into the chat window. They want to see what you’d actually do at work.

- You’re still expected to sit in the driver’s seat. AI is a copilot.

- You still own the plan, the tradeoffs, the correctness, and the proof.

- You will need to explain your thinking out loud: the solution AI built, the time and space complexity, what prompts you’re writing next and why. If you type “hey do all this work I have no idea what I’m doing,” that’s a clear no-hire signal. If you’re writing intelligent prompts that provide guidance, direction, and pushback, that’s a pilot using a copilot effectively.

In Conclusion

The industry is still figuring things out, but the direction is clear. Coding as a task is being automated. It’s gone, fam. If you want to write code by hand all day as a career, it’s over. But software engineering as a discipline, the judgment, the systems thinking, the verification, that’s becoming more valuable, not less.

Furthermore, I think people should read Latent Space’s AI Engineer article. If you’re even a little bit technical and know how to tinker and put things together, you can do amazing things quickly with AI, even if you’re no good at LeetCode. Just look at Moltbook, fully made by a vibe coder with plenty of security concerns to go with it.

Don’t fight the AI wave. You won’t win. There’s a new divide forming. Adapt or die.